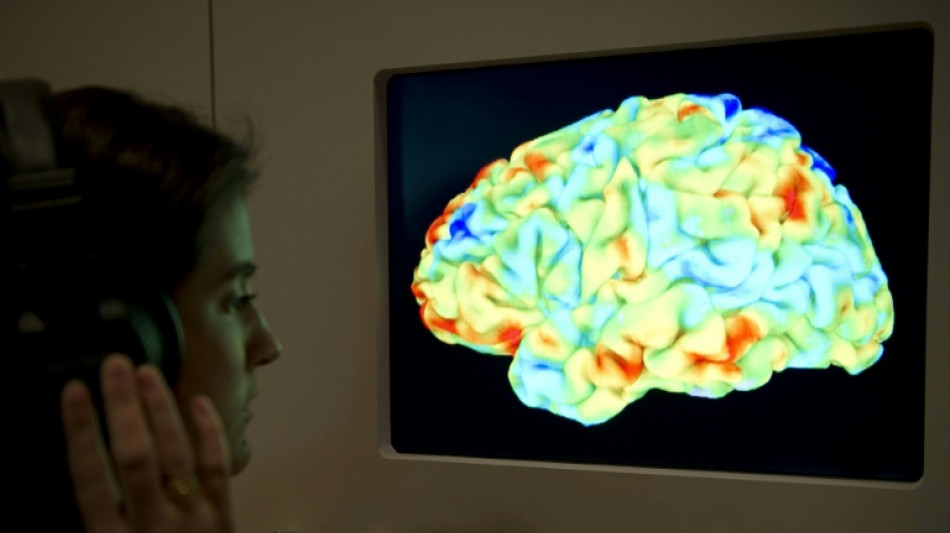

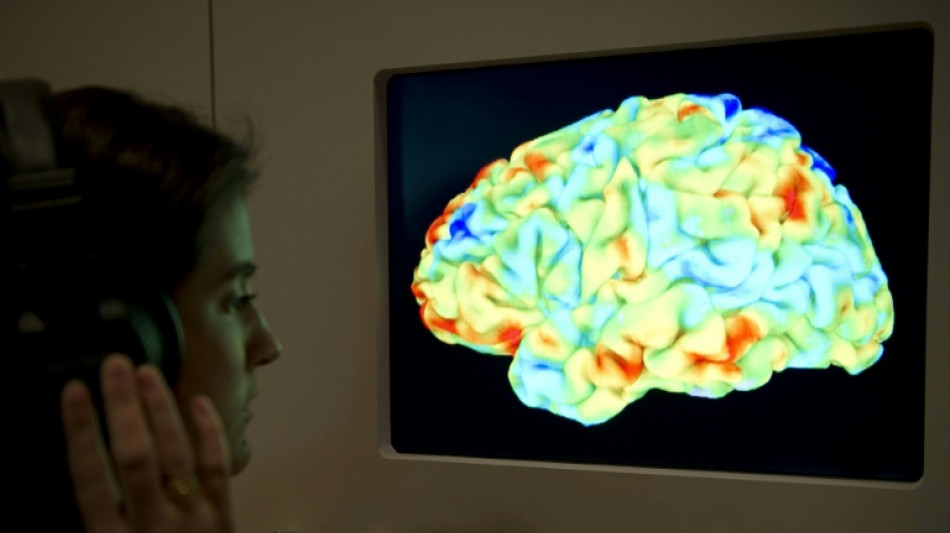

Scientists said Monday they have found a way to use brain scans and artificial intelligence modelling to transcribe "the gist" of what people are thinking, in what was described as a step towards mind reading.

While the main goal of the language decoder is to help people who have lost the ability to communicate, the US scientists acknowledged that the technology raised questions about "mental privacy".

Aiming to assuage such fears, they ran tests showing that their decoder could not be used on anyone who had not allowed it to be trained on their brain activity over long hours inside a functional magnetic resonance imaging (fMRI) scanner.

Previous research has shown that a brain implant can enable people who can no longer speak or type to spell out words or even sentences.

These "brain-computer interfaces" focus on the part of the brain that controls the mouth when it tries to form words.

Alexander Huth, a neuroscientist at the University of Texas at Austin and co-author of a new study, said that his team's language decoder "works at a very different level".

"Our system really works at the level of ideas, of semantics, of meaning," Huth told an online press conference.

It is the first system able to reconstruct continuous language without an invasive brain implant, according to the study in the journal Nature Neuroscience.

- 'Deeper than language' -

For the study, three people spent a total of 16 hours inside an fMRI machine listening to spoken narrative stories, mostly podcasts such as the New York Times' Modern Love.

This allowed the researchers to map out how words, phrases and meanings prompted responses in the regions of the brain known to process language.

They fed this data into a neural network language model that uses GPT-1, the predecessor of the AI technology later deployed in the hugely popular ChatGPT.

The model was trained to predict how each person's brain would respond to perceived speech. To decode, it then narrowed down candidate word sequences until it found the one whose predicted brain response most closely matched the recorded one.
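
As a rough illustration of that predict-and-compare idea, the toy Python sketch below scores a few candidate phrases by how closely their predicted "brain response" matches an observed one and keeps the closest. Everything in it is an assumption made for illustration: the three-number word features, the averaging "encoding model" and the function names are hypothetical stand-ins, not the researchers' code, data or actual method.

```python
import math

# Hypothetical word features standing in for a per-person encoding model.
# In the study, this role is played by a model fitted to fMRI recordings.
TOY_WORD_FEATURES = {
    "drive": [1.0, 0.0, 0.2],
    "learn": [0.8, 0.1, 0.3],
    "license": [0.9, 0.0, 0.1],
    "dog": [0.0, 1.0, 0.0],
    "barked": [0.1, 0.9, 0.1],
}


def predict_response(words):
    """Predict a toy 'brain response' by averaging the word features."""
    total = [0.0, 0.0, 0.0]
    for word in words:
        for i, value in enumerate(TOY_WORD_FEATURES[word]):
            total[i] += value
    return [t / len(words) for t in total]


def distance(a, b):
    """Euclidean distance between two response vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def decode(observed, candidates):
    """Return the candidate whose predicted response is closest to the observed one."""
    return min(candidates, key=lambda c: distance(predict_response(c), observed))


# Pretend the participant heard something about a driver's license.
observed = predict_response(["drive", "license"])
candidates = [["learn", "drive"], ["dog", "barked"]]
best = decode(observed, candidates)
print(" ".join(best))
```

In this toy setup the decoder picks the driving-related phrase rather than the exact words that were heard, which loosely mirrors the "gist" behaviour described in the study.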

To test the model's accuracy, each participant then listened to a new story in the fMRI machine.

The study's first author Jerry Tang said the decoder could "recover the gist of what the user was hearing".

For example, when the participant heard the phrase "I don't have my driver's license yet", the model came back with "she has not even started to learn to drive yet".

The decoder struggled with personal pronouns such as "I" or "she," the researchers admitted.

But even when the participants thought up their own stories -- or viewed silent movies -- the decoder was still able to grasp the "gist," they said.

This showed that "we are decoding something that is deeper than language, then converting it into language," Huth said.

Because fMRI scanning is too slow to capture individual words, it collects a "mishmash, an agglomeration of information over a few seconds," Huth said.

"So we can see how the idea evolves, even though the exact words get lost."

- Ethical warning -

David Rodriguez-Arias Vailhen, a bioethics professor at Spain's Granada University not involved in the research, said it went beyond what had been achieved by previous brain-computer interfaces.

This brings us closer to a future in which machines are "able to read minds and transcribe thought," he said, warning this could possibly take place against people's will, such as when they are sleeping.

The researchers anticipated such concerns.

They ran tests showing that the decoder did not work on a person if it had not already been trained on their own particular brain activity.

The three participants were also able to easily foil the decoder.

While listening to one of the podcasts, the users were told to count by sevens, name and imagine animals or tell a different story in their mind. All these tactics "sabotaged" the decoder, the researchers said.

Next, the team hopes to speed up the process so that they can decode the brain scans in real time.

They also called for regulations to protect mental privacy.

"Our mind has so far been the guardian of our privacy," said bioethicist Rodriguez-Arias Vailhen.

"This discovery could be a first step towards compromising that freedom in the future."

T.Gilbert--TFWP